How AI helped our content thrive in the fast lane

How well does AI understand human empathy? The Thrive Content team set out to discover how they could use Artificial Intelligence to craft compelling, human learning content.

Artificial Intelligence (AI) has been around long enough now that I should really stop thinking the letters A and I together always refer to me (Al) - but it still gets me!

If you're still reading after that joke, excellent. Grab a cuppa and get cosy, because those who know me know I like to natter - but I promise I’ll use AI to make this blog a lot shorter than my first draft. (And maybe even see what a cross between Tigger and Ruby Wax would look like…)

Okay, distractions over.

Why are we talking about AI?

As Chief Content Officer at Thrive, I'm always in search of new ideas, media types and formats of content. In line with this, I'm also interested in finding new behaviours to tackle while continuing to make an impact and deliver real credibility to people.

It couldn't come at a better time. Having recently unveiled our suite of innovative AI features - including an AI-powered WhatsApp coach and an AI authoring tool that creates content with the click of a button - here at Thrive, we've got AI on the brain.

So, with the informal introduction of AI tools to our every day, I wanted to challenge one thing:

Would a learning module created entirely by artificial intelligence (AI) be as effective, creative and credible as our human-designed learning content?

Additionally, could we save time and free up team resources? That’s where the experiment started…

Day 1

We needed a team that would embrace a variety of tools, some we’ve used before and others entirely new. Our Senior Brand Manager Simon is an internal SME (Subject Matter Expert) when it comes to AI design. His passion and deep understanding of the market and tools available (as well as how to get the best from them) were invaluable to this project, so he was first in.

I like to throw things out there and give everyone the chance to be involved… so a quick message in the Thrive Content Team group chat was next. The fastest fingers secured the job, kind of like a couple of contestants in the first round of Who Wants to be a Millionaire?

It took just 12 minutes to secure the resource, with Learning Designer Corrie and our Senior Designer Dan up for the challenge. Yep, that’s how we roll here, we don’t hang about.

Next up, we had to decide what content to work on. We first considered a range of compliance-related topics, as something more theory-based seemed to make the most sense when it came to AI. But we don’t play it safe at Thrive, do we? So, we thought of the most human topic possible…

Empathy.

That's when it got a whole lot more fun. Can machine learning algorithms produce content that requires real human thinking, feeling and connection?

We’ll soon find out.

Day 2

Kick-off call day. Getting together from across multiple departments, the team brought their research to discuss. The list of available tools was lengthy: Adobe Firefly, Adobe AI Enhancer, ElevenLabs, Pictory, Adobe Express, Runway, GPT-4, Google Bard, Pika, Midjourney... and the list goes on.

We agreed the best way forward was to test two tools for each task.

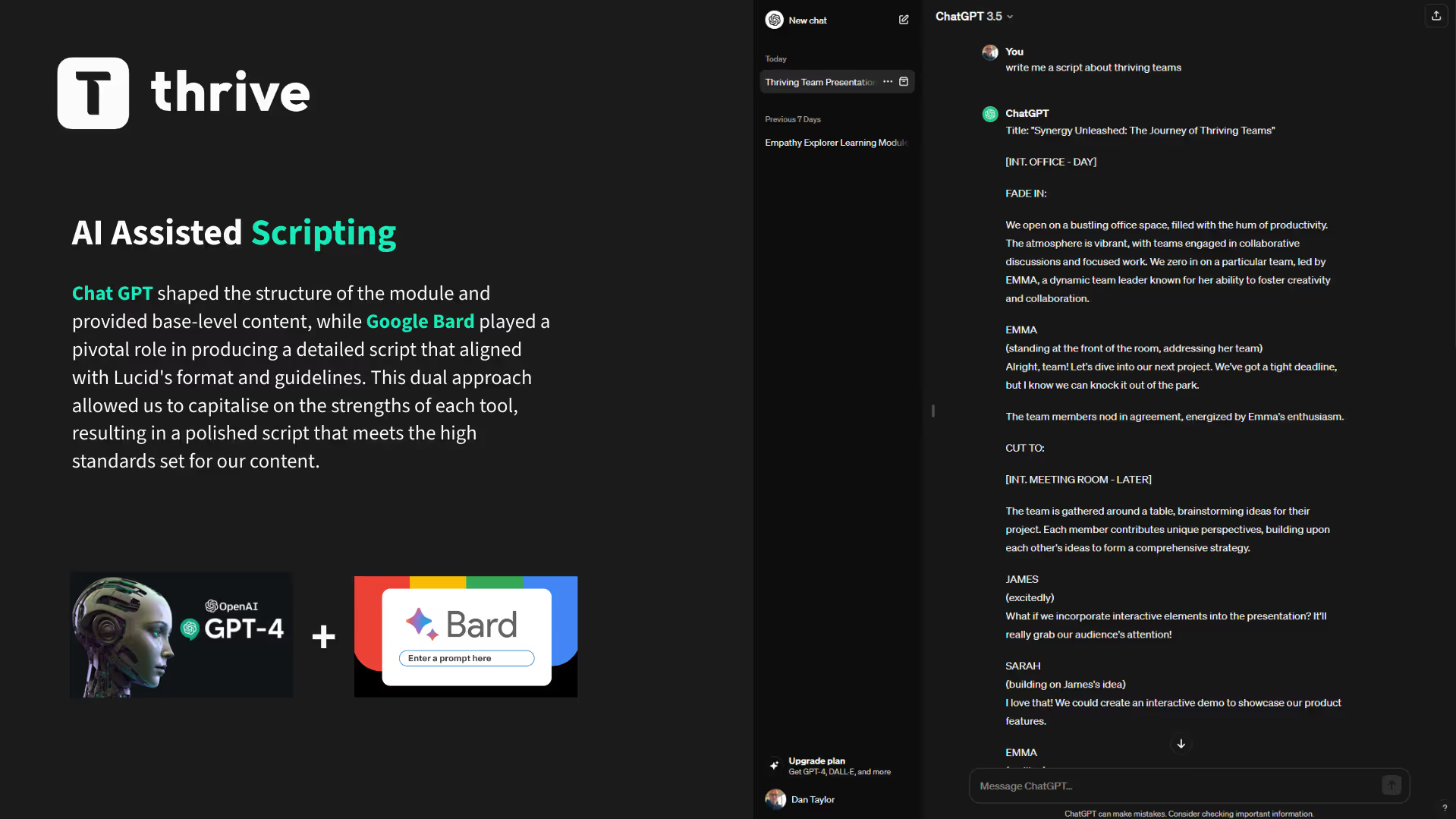

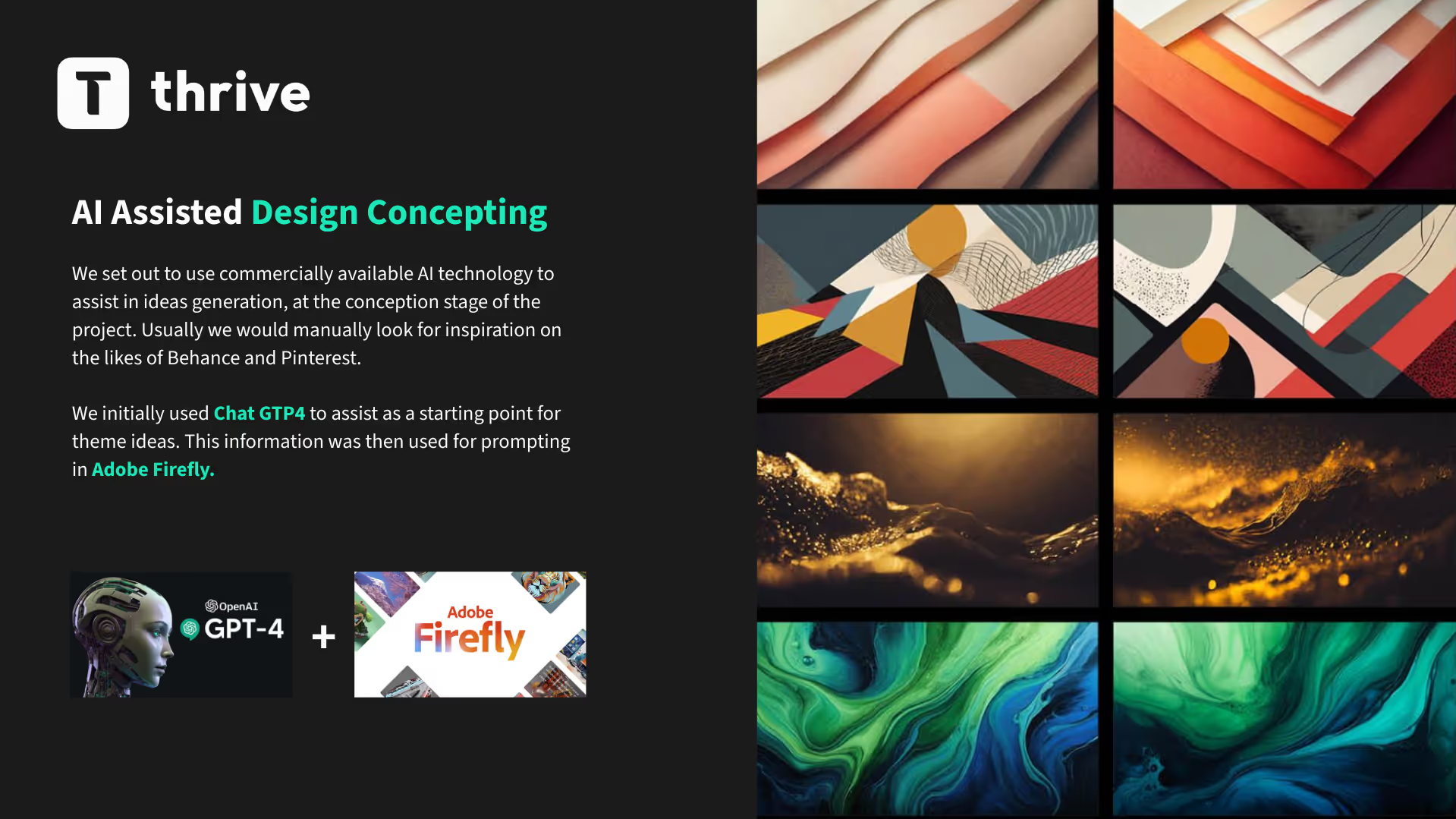

For example, ChatGPT and Google Bard would help with AI-assisted scripting, while GPT-4 and Adobe Firefly would serve the AI-assisted design conception.

Here’s a snapshot of what we planned:

Now, we’re not taking the human element away completely here. This experiment is to see how well AI tools could work to create content.

From the start, I was keen to understand, from a production perspective:

- What could we utilise going forward?

- How much more efficient could we be as a team if we could use tools to take away some of the time-consuming admin?

- What does the cost of these tools look like compared to the time saved?

Saving that time would allow more creative thinking and more meetings with customers, as well as freeing up headspace for new ideas.

Side note: I had Corrie’s one-to-one the same morning as our little project huddle, and he expressed how excited he was for this new project!

Day 3

The AI Creatives group chat was on fire!

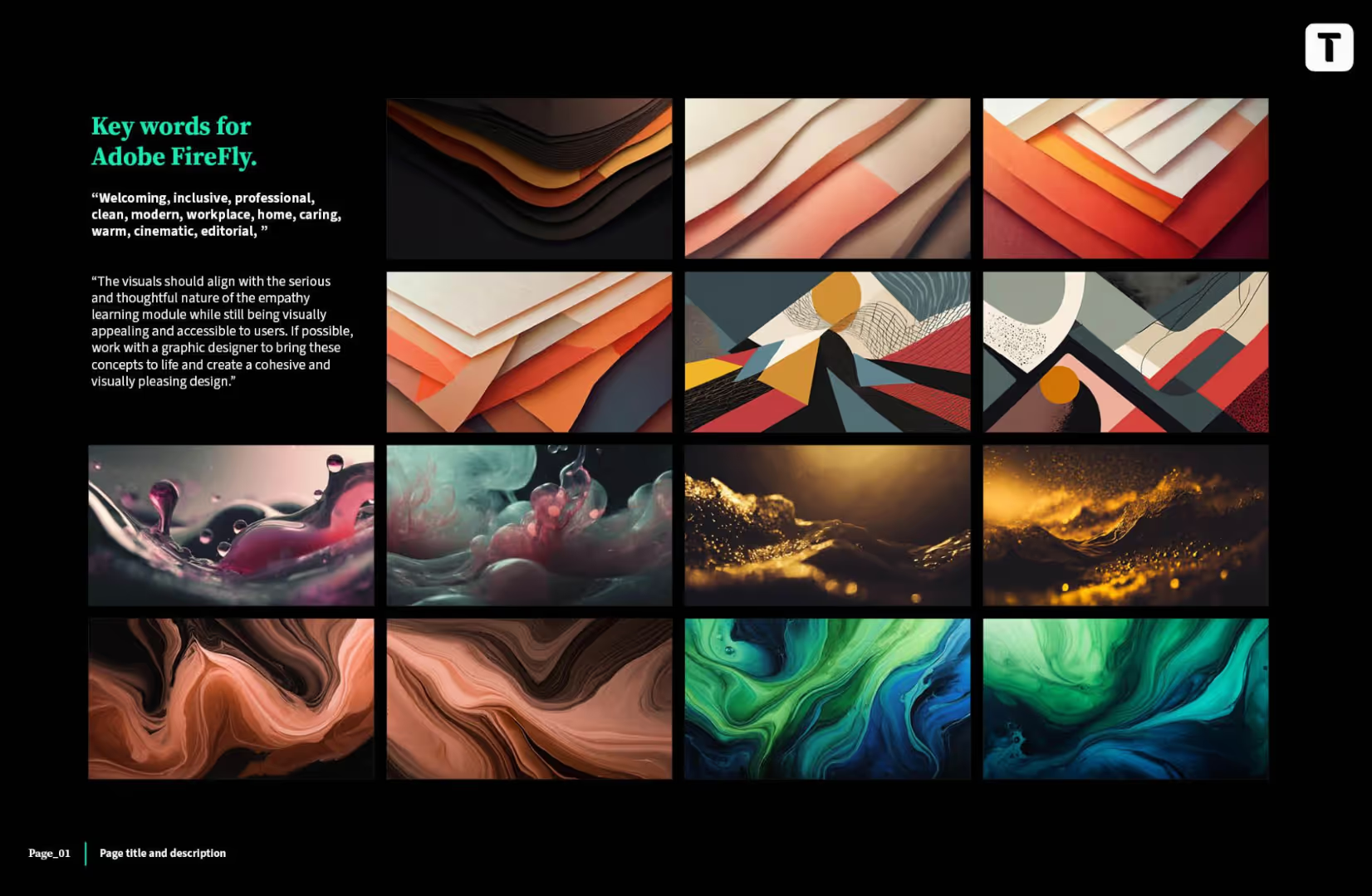

We got busy creating concepts, starting simple. The team used prompts in GPT-4 such as:

“Create a concept image and design idea around the topic of empathy for a learning module, using serious scenarios that we’ll call Empathy for Others.”

It came back with descriptions, initial narratives, insights, reflections and more. Next, we used keywords and results from GPT-4 in Firefly to generate some early designs.

I had Dan’s regular one-to-one on the same day, and he had made some incredible progress. What stood out for me was the curiosity and excitement around working with AI. Some key points I took away from our conversation were:

- It got Dan thinking about how we can apply this to day-to-day tasks and save time.

- Having AI was like having a buddy to bounce ideas and information off.

- Adobe Firefly was proving to be a hit as Dan got clued up on prompts and tweaking outputs.

- It was elevating the richness of ideas.

In only three days, this had already been a really interesting and productive project, with design concepts and visual storyboards created much faster thanks to the time saved researching inspiration.

Option three was the chosen one.

Day 4

We set out to create the build using our in-house authoring tool, Lucid. The script was generated entirely using a combination of ChatGPT and Google Bard, and using these tools meant research and scripting time was halved.

However, the process wasn’t as smooth as it could be. Although Corrie wrote none of the content, the outputs needed some tweaks and changes, and a chunk of time was needed to reformat the copy into our best-practice script templates ready for the build.

The team found that Google Bard was better for a more human tone and simple language.

The next task was to finalise the module using AI-generated imagery and introduction videos. We agreed that two different tools would be used to test out two different AI-generated video scripts: GPT-4 and Adobe Firefly.

So far, graphics time had not decreased hugely, but utilising AI helped so much with generating ideas to get the ball rolling.

However, Firefly was proving to be really useful to source specific imagery and B-roll. We believe this is going to be a really helpful tool for the team moving forward.

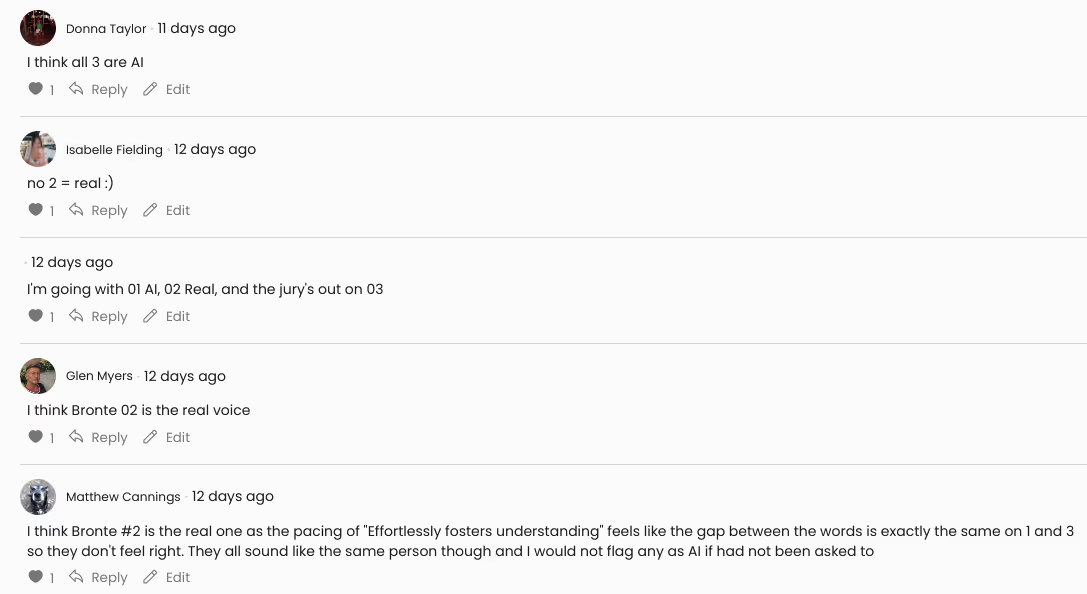

ElevenLabs, an AI voiceover generator and text-to-speech tool, was working really well. We hooked copywriter Brontë in to record a real voiceover and used a short sample for ElevenLabs to generate the same script. Now this was scary!

The results were so realistic that we decided to have an internal competition, “Guess Brontë's real voiceover”, to put it to the test and see if anyone could work out which was the real voice. They couldn’t, and here are the comments to prove it.

AI was actually clip number 1.

Day 5

It’s alive!

The Understanding Empathy module is finished, and we kicked this project off just under 4 days ago.

But why stop there? We’re having too much fun.

The team decided they wanted to push it further. Now, can we create a video-based scenario? How about translations? What about audio only and creating a podcast?

Day 6

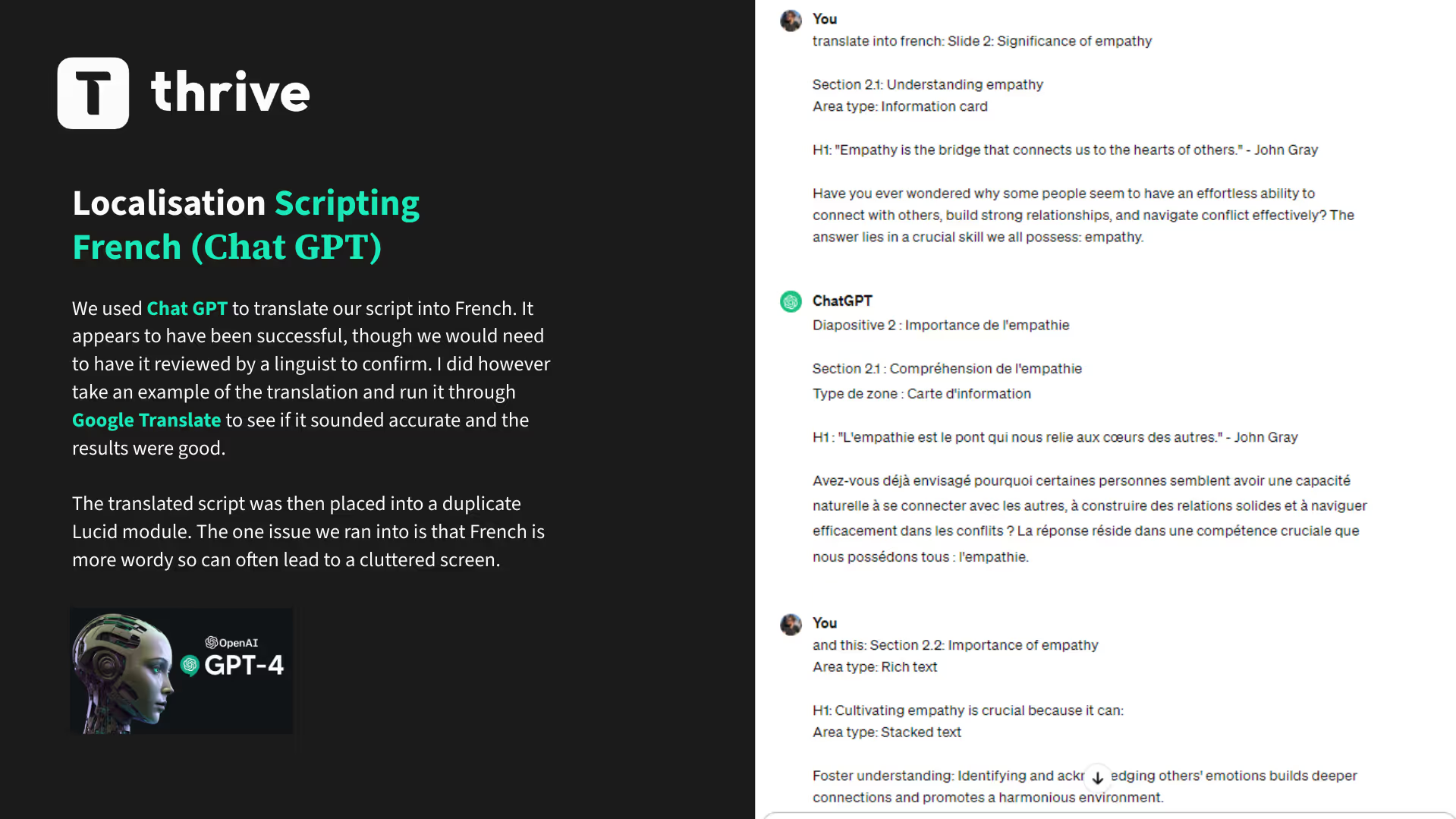

Who knew Brontë spoke fluent French? No one, because she can’t. However, with the help of GPT-4 and ElevenLabs, we sure made it sound like she could.

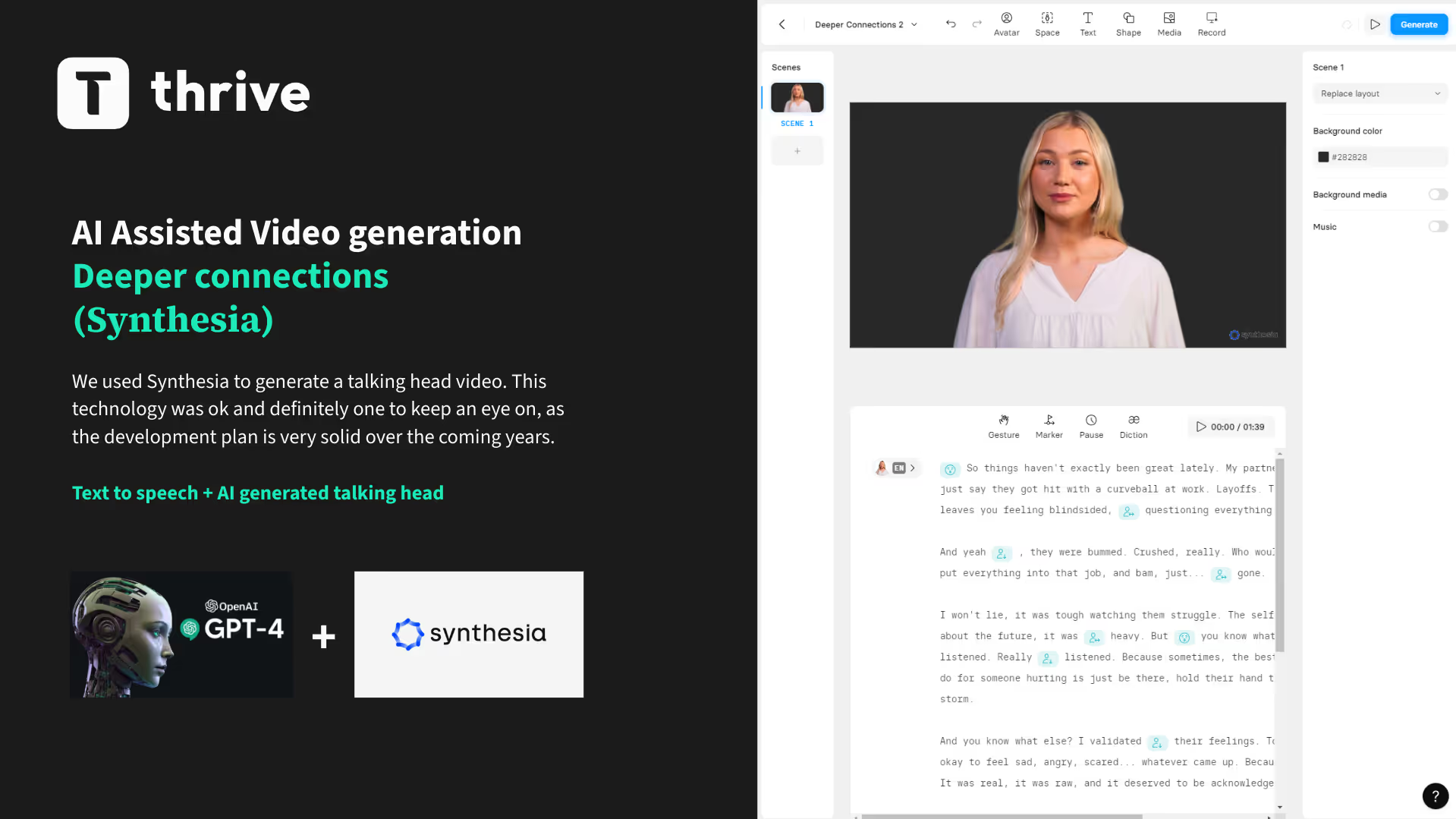

Meanwhile, Dan was working on two approaches to AI-assisted video generation: one using instructor-led video via Synthesia and the other using stock B-roll with Runway. Here’s what we found:

Now we have some additions to the original mix: a fully translated French version of the module, a talking head made completely with AI, and an audio story.

In my quest for content that's as flawless as a perfect espresso shot, I'm calling in the big guns.

Firstly, our French linguist is the ultimate guardian of all things chic and grammatically correct.

Next, to ensure our English module isn't just good but worthy of a standing ovation, we’re verifying it with an external Learning Specialist with a knack for spotting AI genius. It's like having a Michelin star inspector in your kitchen - terrifying but oh-so-validating. Because let's face it, in the world of learning, validation isn't just nice, it's essential.

Day 7 - “ChatGPT, round up this blog for me”

Seven days. Four resources. A whole lot of fun... and we’re not finished.

The team has kept the spark alive, and is continuing to push the boundaries of what’s possible. We're now looking at tools such as DALL-E, Veed.io and InVideo because tech keeps evolving and Thrive Content never stands still.

But what did we learn along the way?

- The main bulk of time saved is on scripting and copywriting.

- AI is definitely in our content toolkit. Some of the features planned for the tools mentioned here are going to be game-changing, and will minimise some of the challenges we have when working on very specific content.

- We shouldn’t be frightened of AI. I can’t see it taking over jobs anytime soon. Human intervention is very much still essential to ensure quality, humanity and accuracy.

- AI is capable of taking on human topics, but again, still requires validation.

- Utilising AI will allow us to continue to create great content whilst giving everyone more time to use their heads and hearts on the area of their roles most important to them: creativity.

- We have absolutely got to keep up with the tools and trends in AI - Bard has now been renamed Gemini - who saw that coming?!

Also, AI can be very amusing when glitching, and we all know it’s definitely not perfect (yet). But until it is, who doesn’t love a random laugh at someone with seven fingers during the working day?

I hope you’ve enjoyed reading this project journey from my perspective. Keep an eye out as we’ll be sharing all the resources for free, so you can be the judge.

All my best,

Al (actual AL this time, not AI. But here is what AI thinks I look like…)